Architecture Overview

The NVIDIA container stack is designed so that it can support any container runtime in the ecosystem. The stack consists of the following components:

- The NVIDIA Container Runtime (nvidia-container-runtime)

- The NVIDIA Container Runtime Hook (nvidia-container-toolkit / nvidia-container-runtime-hook)

- The NVIDIA Container Library and CLI (libnvidia-container1, nvidia-container-cli)

The components of the NVIDIA container stack are packaged as the NVIDIA Container Toolkit.

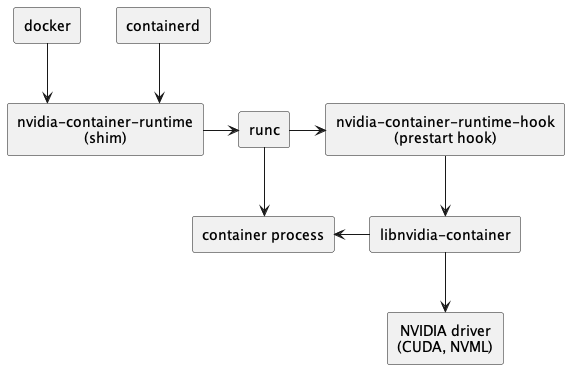

How these components are used depends on the container runtime. For docker or containerd, the NVIDIA Container Runtime (nvidia-container-runtime) is configured as an OCI-compliant runtime, and the flow through the various components is shown in the following diagram:

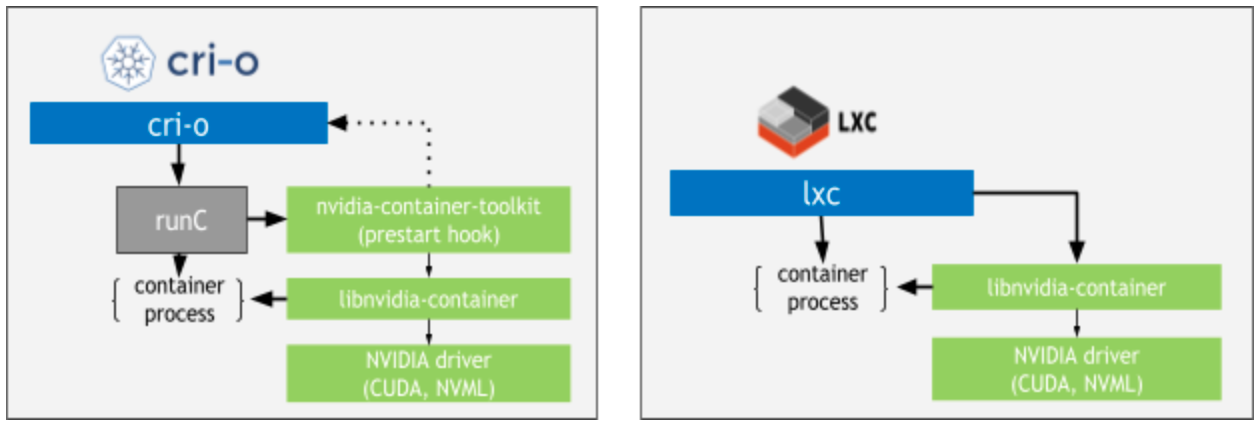

The flow through the components for cri-o and lxc is shown in the following diagram. Note that in this case the NVIDIA Container Runtime component is not required.

Container Creation Process

As shown in the diagram above, the container creation process includes the following steps:

- The Docker CLI or containerd requests the creation of a container.

- nvidia-container-runtime handles the container creation request, since it is registered as a runtime in the Docker daemon configuration (/etc/docker/daemon.json):

      {
        "runtimes": {
          "nvidia": {
            "path": "/usr/bin/nvidia-container-runtime",
            "runtimeArgs": []
          }
        }
      }

- runc is called to create the container. Before the container is started, nvidia-container-runtime-hook is called, since it is configured as a prestart hook.

- nvidia-container-runtime-hook calls nvidia-container-cli to inject NVIDIA GPU support into the container. (libnvidia-container provides a well-defined API and a wrapper CLI called nvidia-container-cli, e.g. nvidia-container-cli --load-kmods configure --ldconfig=@/sbin/ldconfig.real --no-cgroups --utility --device 0 $(pwd))
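The runtime registration in daemon.json can be sketched as a small merge step. This is only an illustration: the function name register_nvidia_runtime and the sample "log-driver" setting are assumptions made for the example, not part of the toolkit.

```python
import json


def register_nvidia_runtime(daemon_config: dict,
                            runtime_path: str = "/usr/bin/nvidia-container-runtime") -> dict:
    """Return a copy of a daemon.json config with the "nvidia" runtime registered.

    Hypothetical helper for illustration; it only mirrors the JSON entry shown above.
    """
    # Deep-copy via a JSON round trip so the caller's dict is left untouched.
    updated = json.loads(json.dumps(daemon_config))
    runtimes = updated.setdefault("runtimes", {})
    runtimes["nvidia"] = {"path": runtime_path, "runtimeArgs": []}
    return updated


# Assumed pre-existing daemon settings, used only for the demonstration.
existing = {"log-driver": "json-file"}
merged = register_nvidia_runtime(existing)
print(json.dumps(merged, indent=2))
```

Existing settings are preserved and only the "runtimes" key is added or extended, which is the property to keep when editing a real /etc/docker/daemon.json by hand.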

CDI Support

What is CDI

Refer to: https://github.com/cncf-tags/container-device-interface

CDI (Container Device Interface) is a specification that lets container runtimes support third-party devices. It provides a vendor-agnostic mechanism to make arbitrary devices accessible in containerized environments.

It introduces an abstract notion of a device as a resource. Such devices are uniquely specified by a fully-qualified name that is constructed from a vendor ID, a device class, and a name that is unique per vendor ID-device class pair.

vendor.com/class=unique_name

The combination of vendor ID and device class (vendor.com/class in the above example) is referred to as the device kind.
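To make the naming scheme concrete, here is a minimal sketch that splits a fully-qualified name into its three parts. The names QualifiedDevice and parse_qualified_name are hypothetical, and the sketch does not enforce the character-set rules that the CDI specification places on each part.

```python
from typing import NamedTuple


class QualifiedDevice(NamedTuple):
    vendor: str
    device_class: str
    name: str

    @property
    def kind(self) -> str:
        # The device kind is the vendor ID and device class joined by "/".
        return f"{self.vendor}/{self.device_class}"


def parse_qualified_name(device: str) -> QualifiedDevice:
    """Split "vendor.com/class=unique_name" into vendor, class, and name.

    Hypothetical helper: it checks structure only, not allowed characters.
    """
    kind, equals, name = device.partition("=")
    vendor, slash, device_class = kind.partition("/")
    if not (equals and slash and vendor and device_class and name):
        raise ValueError(f"not a fully-qualified device name: {device!r}")
    return QualifiedDevice(vendor, device_class, name)


dev = parse_qualified_name("vendor.com/class=unique_name")
print(dev.kind, dev.name)
```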

CDI concerns itself only with enabling containers to be device aware. Areas like resource management are explicitly left out of CDI (and are expected to be handled by the orchestrator). Because of this focus, the CDI specification is simple to implement and allows great flexibility for runtimes and orchestrators.

Why is CDI needed

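Exposing a device to a container used to be as simple as exposing a single device node. As devices and software grow more complex, vendors need to perform more operations, such as:

- Exposing a device to a container can require exposing more than one device node, mounting files from the runtime namespace, or hiding procfs entries.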

- Performing compatibility checks between the container and the device (e.g: Can this container run on this device?).

- Performing runtime-specific operations (e.g: VM vs Linux container-based runtimes).

- Performing device-specific operations (e.g: scrubbing the memory of a GPU or reconfiguring an FPGA).

A uniform standard for third-party device management is needed; otherwise, vendors have to write and maintain multiple plugins for different runtimes, or even contribute vendor-specific code directly to the runtimes.

How does CDI work

- A CDI file containing updates for the OCI spec in JSON format should be present in the CDI spec directory. The default directories are /etc/cdi and /var/run/cdi.

- The fully-qualified device name should be passed to the runtime, either using command line parameters for podman or using container annotations for CRI-O and containerd.

- The container runtime should be able to find the CDI file by the device name and update the container config using the CDI file content.
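The lookup step can be sketched as building an index from fully-qualified device names to the spec files that declare them. This is a simplified illustration, not how any particular runtime implements it: load_device_index is a hypothetical name, only .json files are read, no schema validation is done, and later directories simply overwrite earlier ones.

```python
import json
import tempfile
from pathlib import Path

# Default CDI spec directories named in the text above.
DEFAULT_SPEC_DIRS = ("/etc/cdi", "/var/run/cdi")


def load_device_index(spec_dirs=DEFAULT_SPEC_DIRS) -> dict:
    """Map each fully-qualified device name to the parsed spec declaring it.

    Hypothetical helper for illustration only.
    """
    index = {}
    for directory in spec_dirs:
        root = Path(directory)
        if not root.is_dir():
            continue  # a missing spec directory is skipped, not treated as an error
        for spec_file in sorted(root.glob("*.json")):
            spec = json.loads(spec_file.read_text())
            for device in spec.get("devices", []):
                index[f"{spec['kind']}={device['name']}"] = spec
    return index


# Demonstration against a temporary directory instead of /etc/cdi.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "vendor.json").write_text(json.dumps({
        "cdiVersion": "0.6.0",
        "kind": "vendor.com/device",
        "devices": [{"name": "myDevice", "containerEdits": {}}],
    }))
    index = load_device_index([tmp])

print(sorted(index))
```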

The Container Device Interface enables the following two flows:

A. Device Installation

- A user installs a third-party device driver (and third-party device) on a machine (e.g. nvidia-device-plugin).

- The device driver installation software writes a JSON file at a well-known path (/etc/cdi/vendor.json).

B. Container Runtime

- A user runs a container with the argument --device followed by a device name.

- The container runtime reads the JSON file.

- The container runtime validates that the device is described in the JSON file.

- The container runtime pulls the container image.

- The container runtime generates an OCI specification.

- The container runtime transforms the OCI specification according to the instructions in the JSON file.
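The final transformation step can be sketched as merging containerEdits into an OCI config. This is a deliberately reduced illustration: apply_container_edits and inject_device are hypothetical names, only env, deviceNodes, and mounts are handled, and hooks and device cgroup rules (derived from "permissions") are left out.

```python
import copy


def apply_container_edits(oci_spec: dict, edits: dict) -> dict:
    """Return a copy of oci_spec with env, deviceNodes, and mounts edits merged in."""
    spec = copy.deepcopy(oci_spec)
    spec.setdefault("process", {}).setdefault("env", []).extend(edits.get("env", []))
    linux = spec.setdefault("linux", {})
    for node in edits.get("deviceNodes", []):
        # Copy only the fields that an OCI linux.devices entry carries.
        fields = ("path", "type", "major", "minor", "fileMode", "uid", "gid")
        linux.setdefault("devices", []).append({k: node[k] for k in fields if k in node})
    for mount in edits.get("mounts", []):
        # CDI uses hostPath/containerPath; OCI mounts use source/destination.
        entry = {"source": mount["hostPath"], "destination": mount["containerPath"]}
        for optional in ("type", "options"):
            if optional in mount:
                entry[optional] = mount[optional]
        spec.setdefault("mounts", []).append(entry)
    return spec


def inject_device(oci_spec: dict, cdi_spec: dict, device_name: str) -> dict:
    """Validate that the device is described, then apply spec-level and device-level edits."""
    device = next((d for d in cdi_spec["devices"] if d["name"] == device_name), None)
    if device is None:
        raise LookupError(f"{cdi_spec['kind']}={device_name} is not described in the CDI spec")
    spec = apply_container_edits(oci_spec, cdi_spec.get("containerEdits", {}))
    return apply_container_edits(spec, device.get("containerEdits", {}))


# Trimmed-down CDI spec and OCI config, assumed for the demonstration.
cdi_spec = {
    "kind": "vendor.com/device",
    "devices": [{"name": "myDevice", "containerEdits": {
        "deviceNodes": [{"path": "/dev/card1", "type": "c", "major": 25, "minor": 25}]}}],
    "containerEdits": {"env": ["FOO=VALID_SPEC"]},
}
oci_spec = {"ociVersion": "1.0.2", "process": {"env": ["PATH=/usr/bin"]}}

result = inject_device(oci_spec, cdi_spec, "myDevice")
print(result["process"]["env"])
print(result["linux"]["devices"])
```

Working on a copy keeps the generated OCI specification and the transformed one separate, which makes the effect of a CDI spec easy to inspect.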

Example of using CDI

    $ mkdir /etc/cdi
    $ cat > /etc/cdi/vendor.json <<EOF
    {
      "cdiVersion": "0.6.0",
      "kind": "vendor.com/device",
      "devices": [
        {
          "name": "myDevice",
          "containerEdits": {
            "deviceNodes": [
              {"hostPath": "/vendor/dev/card1", "path": "/dev/card1", "type": "c", "major": 25, "minor": 25, "fileMode": 384, "permissions": "rw", "uid": 1000, "gid": 1000},
              {"path": "/dev/card-render1", "type": "c", "major": 25, "minor": 25, "fileMode": 384, "permissions": "rwm", "uid": 1000, "gid": 1000}
            ]
          }
        }
      ],
      "containerEdits": {
        "env": [
          "FOO=VALID_SPEC",
          "BAR=BARVALUE1"
        ],
        "deviceNodes": [
          {"path": "/dev/vendorctl", "type": "b", "major": 25, "minor": 25, "fileMode": 384, "permissions": "rw", "uid": 1000, "gid": 1000}
        ],
        "mounts": [
          {"hostPath": "/bin/vendorBin", "containerPath": "/bin/vendorBin"},
          {"hostPath": "/usr/lib/libVendor.so.0", "containerPath": "/usr/lib/libVendor.so.0"},
          {"hostPath": "tmpfs", "containerPath": "/tmp/data", "type": "tmpfs", "options": ["nosuid","strictatime","mode=755","size=65536k"]}
        ],
        "hooks": [
          {"createContainer": {"path": "/bin/vendor-hook"} },
          {"startContainer": {"path": "/usr/bin/ldconfig"} }
        ]
      }
    }
    EOF

In the case of podman, the CLI for accessing the device would be:

$ podman run --device vendor.com/device=myDevice ...
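One detail of the example spec that is easy to misread is "fileMode": 384. JSON has no octal literals, so the permission bits are written in decimal. The short check below shows what that value corresponds to for a character device ("type": "c"):

```python
import stat

file_mode = 384  # the "fileMode" value used in the example spec

# Decimal 384 is octal 600: read/write for the owner only.
print(oct(file_mode))

# Rendered the way ls would show it for a character device node.
print(stat.filemode(stat.S_IFCHR | file_mode))
```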

How does the NVIDIA Container Toolkit integrate with CDI in Kubernetes

To allow NVIDIA devices to be used in these environments, the NVIDIA Container Toolkit CLI includes functionality to generate a CDI specification for the available NVIDIA GPUs in a system.

A. NVIDIA Device Plugin

- The nvidia-device-plugin writes a JSON file at a well-known path (/etc/cdi/vendor.json).

B. NVIDIA Container Runtime

- The nvidia-container-runtime is set to cdi mode.

- The nvidia-container-runtime reads the CDI JSON file.

- The nvidia-container-runtime validates that the device is described in the JSON file.

- The nvidia-container-runtime generates an OCI specification.

- The nvidia-container-runtime transforms the OCI specification according to the instructions in the JSON file.

- The nvidia-container-runtime calls runc to create containers.

Refer to: nvidia-container-runtime.cdi