Recently, KubeCon North America 2021 was held online. @denkensk and @yuanchen8911, active contributors to the scheduler-plugins open source project of the Kubernetes sig-scheduling group, gave a talk on Capacity Scheduling, an elastic capacity quota scheduler plug-in.

1. Background

Related proposal: KEP-9: Capacity scheduling.

Source code: Capacity scheduling

- The native Kubernetes ResourceQuota mechanism is limited to a single namespace (a ResourceQuota can only be configured per namespace).

- When preemption occurs during Pod scheduling, the priority from the Pod's PriorityClass is the only criterion for deciding whether to preempt.

- There is no more flexible resource quota mechanism that spans namespaces.

- When applications are scheduled in batches, cluster resource utilization is low: some Pods consume namespace quota as soon as they are created, but then fail to be scheduled, so the resources are never actually used.

2. Function introduction

The Capacity Scheduling plug-in introduces a new quota mechanism, Elastic Quota, built on the following concepts:

- max: the upper limit on resources that Pods can request, that is, the maximum resources the entire namespace can use.

- min: the guaranteed lower limit of resources for Pods in the quota. While part of the min is idle (requests + allocated <= min), the idle portion can 1. be borrowed by other quotas, and 2. be given preferentially to Pods that were preempted (victim Pods).

- The buffer between min and max tolerates abnormal Pods. For example, if Pods use up the entire min of an Elastic Quota at creation but run abnormally (the resources are not actually used), other Pods can still be created successfully until max is reached. With Kubernetes' native ResourceQuota, once usage reaches the quota value, no new Pods can be created.

- Workloads using Elastic Quota A may “borrow” unused min quota from another Elastic Quota B, up to A's max. This ensures that after a workload's Pod is preempted (becomes a victim), it can be recreated promptly using idle quota borrowed from other Elastic Quotas.
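The min/max semantics above can be summarized as a small decision function. This is a minimal sketch for a single resource (for example GPUs); `Decision` and `classifyRequest` are hypothetical names, not the plug-in's actual code, and the sketch deliberately ignores whether other quotas really have idle min to lend.

```go
package main

import "fmt"

// Decision is a hypothetical label for how a request relates to an Elastic Quota.
type Decision string

const (
	Guaranteed Decision = "guaranteed" // used + request stays within min
	Borrowing  Decision = "borrowing"  // between min and max: relies on idle min of other quotas
	Rejected   Decision = "rejected"   // would exceed max
)

// classifyRequest decides how a request of size req fits a quota that already
// has `used` allocated, for one resource type. It does not check whether other
// quotas actually have idle min to lend; that would be a separate, cluster-wide check.
func classifyRequest(used, req, quotaMin, quotaMax int64) Decision {
	switch total := used + req; {
	case total <= quotaMin:
		return Guaranteed
	case total <= quotaMax:
		return Borrowing
	default:
		return Rejected
	}
}

func main() {
	// QuotaA from the scheduling example in this article: min 4, max 6 GPUs.
	fmt.Println(classifyRequest(0, 4, 4, 6)) // guaranteed
	fmt.Println(classifyRequest(4, 2, 4, 6)) // borrowing
	fmt.Println(classifyRequest(4, 3, 4, 6)) // rejected
}
```

Keeping the check per resource mirrors how `v1.ResourceList` values are compared one resource name at a time.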

3. Implementation principle

Introduction to the Elastic Quota CRD

The Elastic Quota CRD contains the following fields:

```go
import (
	v1 "k8s.io/api/core/v1"
	metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
)

// ElasticQuota sets elastic quota restrictions per namespace.
type ElasticQuota struct {
	metav1.TypeMeta
	metav1.ObjectMeta
	Spec   ElasticQuotaSpec
	Status ElasticQuotaStatus
}

// ElasticQuotaSpec defines the Min and Max for Quota.
type ElasticQuotaSpec struct {
	Min v1.ResourceList
	Max v1.ResourceList
}

// ElasticQuotaStatus defines the observed use.
type ElasticQuotaStatus struct {
	Used v1.ResourceList
}
```
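As a rough illustration of what `Status.Used` represents, the sketch below sums the requests of Pods that have already been scheduled in the quota's namespace. It assumes a simplified, hypothetical `ResourceList` (`map[string]int64`) in place of `v1.ResourceList` so that it runs without Kubernetes dependencies; `podInfo` and `computeUsed` are also hypothetical, and real code would work with `resource.Quantity` values.

```go
package main

import "fmt"

// ResourceList is a simplified, hypothetical stand-in for v1.ResourceList:
// resource name -> amount (e.g. millicores for cpu, count for GPUs).
type ResourceList map[string]int64

// podInfo is a hypothetical, minimal view of a Pod.
type podInfo struct {
	Namespace string
	NodeName  string // empty until the Pod has been scheduled
	Requests  ResourceList
}

// computeUsed sums the requests of scheduled Pods in one namespace,
// reflecting the idea that only scheduled Pods count toward max.
func computeUsed(namespace string, pods []podInfo) ResourceList {
	used := ResourceList{}
	for _, p := range pods {
		if p.Namespace != namespace || p.NodeName == "" {
			continue // other namespace, or not scheduled yet
		}
		for name, qty := range p.Requests {
			used[name] += qty
		}
	}
	return used
}

func main() {
	pods := []podInfo{
		{Namespace: "test", NodeName: "node-1", Requests: ResourceList{"cpu": 2000, "nvidia.com/gpu": 1}},
		{Namespace: "test", NodeName: "", Requests: ResourceList{"cpu": 4000}}, // pending: not counted
		{Namespace: "other", NodeName: "node-2", Requests: ResourceList{"cpu": 1000}},
	}
	fmt.Println(computeUsed("test", pods)) // map[cpu:2000 nvidia.com/gpu:1]
}
```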

Configuration example:

```yaml
apiVersion: scheduling.sigs.k8s.io/v1alpha1
kind: ElasticQuota
metadata:
  name: test
  namespace: test
spec:
  max:
    cpu: 20
    memory: 40Gi
    nvidia.com/gpu: 2
  min:
    cpu: 10
    memory: 20Gi
    nvidia.com/gpu: 1
```

Relationship between Elastic Quota and Resource Quota

Resource Quota has the field “hard”, while Elastic Quota has the fields “min” and “max”. It is expected that Elastic Quota will be integrated into Resource Quota in the future:

- hard: When a Pod is created, the admission mechanism checks whether the Pod's resource requests fit within hard. As soon as a Pod creation request is submitted, it counts toward hard usage, so this value should be larger than the total requests of all Pods and greater than or equal to max.

- max: In the PreFilter phase of Pod scheduling, the plug-in checks whether the Pod's resource requests fit within max. Only successfully scheduled Pods count toward max usage.

- min: the quota's minimum resource guarantee.

- The three values must satisfy min <= max <= hard.
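The ordering constraint min <= max <= hard can be checked resource by resource. A minimal sketch, again using a hypothetical `ResourceList` map instead of `v1.ResourceList`; `checkLE` and `validateQuota` are hypothetical helpers, not part of the plug-in or of ResourceQuota admission, and the sketch assumes that a resource missing from the upper list is unbounded.

```go
package main

import "fmt"

// ResourceList is a simplified, hypothetical stand-in for v1.ResourceList.
type ResourceList map[string]int64

// checkLE returns an error for a resource present in both lists where lower exceeds upper.
// Resources missing from upper are treated as unbounded (an assumption of this sketch).
func checkLE(lowerName, upperName string, lower, upper ResourceList) error {
	for name, lo := range lower {
		if hi, ok := upper[name]; ok && lo > hi {
			return fmt.Errorf("%s: %s (%d) exceeds %s (%d)", name, lowerName, lo, upperName, hi)
		}
	}
	return nil
}

// validateQuota checks min <= max <= hard for every resource.
func validateQuota(quotaMin, quotaMax, hard ResourceList) error {
	if err := checkLE("min", "max", quotaMin, quotaMax); err != nil {
		return err
	}
	if err := checkLE("max", "hard", quotaMax, hard); err != nil {
		return err
	}
	return checkLE("min", "hard", quotaMin, hard)
}

func main() {
	// Values from the ElasticQuota example (cpu in cores, memory in GiB),
	// plus a hypothetical ResourceQuota hard limit.
	quotaMin := ResourceList{"cpu": 10, "memory": 20, "nvidia.com/gpu": 1}
	quotaMax := ResourceList{"cpu": 20, "memory": 40, "nvidia.com/gpu": 2}
	hard := ResourceList{"cpu": 24, "memory": 48, "nvidia.com/gpu": 2}
	fmt.Println(validateQuota(quotaMin, quotaMax, hard)) // <nil>

	hard["nvidia.com/gpu"] = 1 // hard is now below max for GPUs
	fmt.Println(validateQuota(quotaMin, quotaMax, hard)) // nvidia.com/gpu: max (2) exceeds hard (1)
}
```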

Scheduler plug-in principle

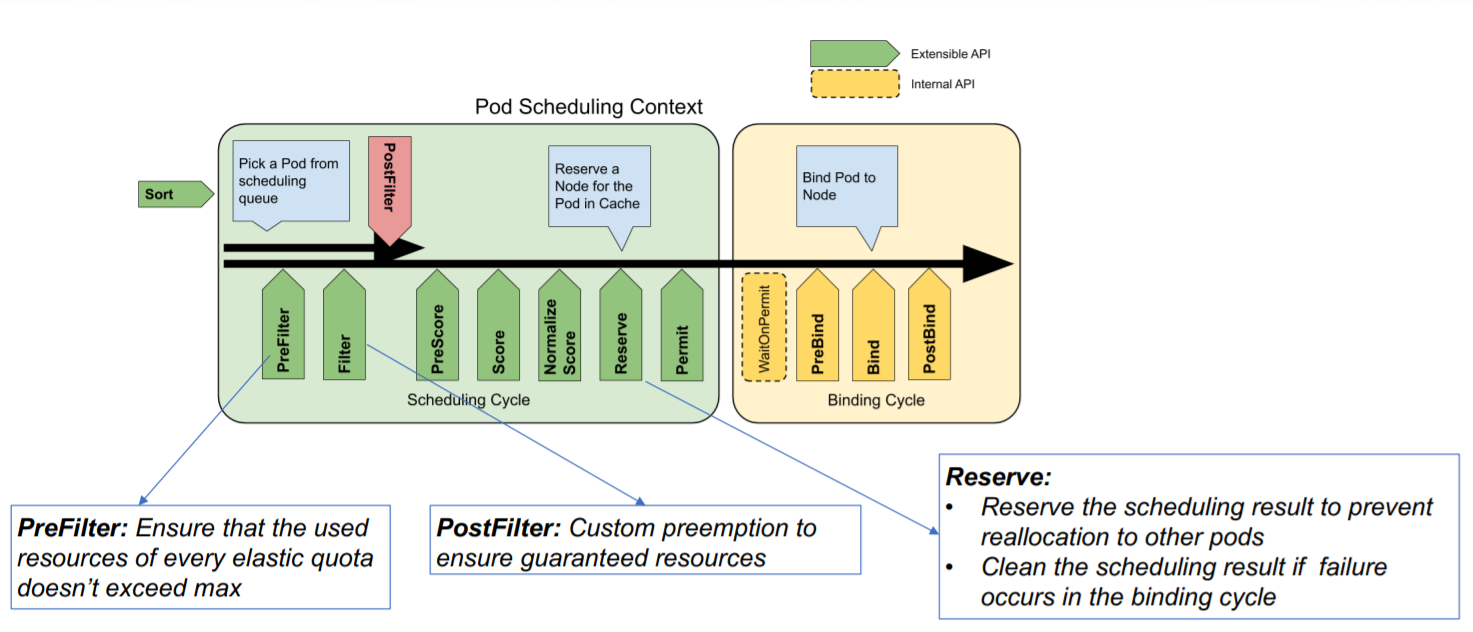

a. Scheduler extension points

- The PreFilter stage ensures that the resources required by the Pod do not exceed the quota's max.

- The PostFilter stage checks whether the quota's min guarantee has been reached. If it has not, the plug-in runs its custom preemption logic.

- In the Reserve phase, the quota pre-reserves the resources required by the Pod in this scheduling cycle, so that the next scheduling cycle does not hand out the same quota again. If binding the Pod to its node fails in the Bind phase, the reserved quota is released.

b. Scheduling example

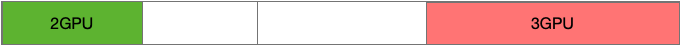

We assume that the total GPU capacity of the current cluster is 10, and there are QuotaA (min:4, max:6) and QuotaB (min:6, max:8) in the cluster.

Initially, neither QuotaA nor QuotaB has reached its min.

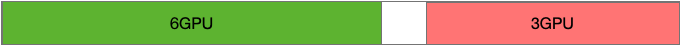

The user then creates workloads so that QuotaA reaches its min (4 GPUs), while QuotaB uses 3 GPUs and is still below its min of 6 (partly idle). The cluster therefore has 10 - 4 - 3 = 3 GPUs left.

If the user now wants to use the remaining 3 GPUs through QuotaA, only 2 of them can be used, because that brings QuotaA to its max of 6. In effect, QuotaA “borrows” 2 GPUs from the unused part of QuotaB's min.

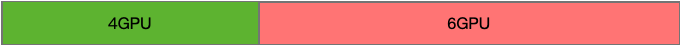

Next, if the user creates a workload that uses QuotaB, its Pods are given priority until QuotaB reaches its min. QuotaB needs 3 more GPUs: it takes the 1 free GPU, and the 2 GPUs previously “borrowed” by QuotaA are returned to QuotaB through preemption. In the end both quotas hold exactly their min (4 + 6 = 10), since min is the minimum guaranteed resource usage.
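The arithmetic of this example can be replayed with a toy model. A minimal sketch, assuming a single resource (GPUs) and hypothetical helpers `allocate` and `reclaim`; the real plug-in's preemption selects concrete victim Pods and checks node fit, which this model ignores.

```go
package main

import "fmt"

// clusterGPUs is the total GPU capacity assumed in the example.
const clusterGPUs int64 = 10

// quota is a hypothetical single-resource (GPU) model of an Elastic Quota.
type quota struct {
	Name     string
	Min, Max int64
	Used     int64
}

// free returns the GPUs not used by any quota.
func free(qs []*quota) int64 {
	left := clusterGPUs
	for _, q := range qs {
		left -= q.Used
	}
	return left
}

// allocate grants q up to want GPUs, capped by q's max and by free capacity.
func allocate(q *quota, want int64, qs []*quota) int64 {
	n := want
	if room := q.Max - q.Used; n > room {
		n = room
	}
	if f := free(qs); n > f {
		n = f
	}
	if n < 0 {
		n = 0
	}
	q.Used += n
	return n
}

// reclaim first uses free capacity, then, while q is below its min, takes GPUs
// back from quotas that are above their own min (modeling preemption of borrowers).
func reclaim(q *quota, want int64, qs []*quota) int64 {
	got := allocate(q, want, qs)
	for _, other := range qs {
		if got >= want || q.Used >= q.Min {
			break
		}
		if other == q || other.Used <= other.Min {
			continue
		}
		need := want - got
		if limit := q.Min - q.Used; need > limit {
			need = limit
		}
		if borrowed := other.Used - other.Min; need > borrowed {
			need = borrowed
		}
		other.Used -= need // victim Pods of `other` are preempted
		q.Used += need
		got += need
	}
	return got
}

func main() {
	a := &quota{Name: "QuotaA", Min: 4, Max: 6}
	b := &quota{Name: "QuotaB", Min: 6, Max: 8}
	qs := []*quota{a, b}

	allocate(a, 4, qs)             // QuotaA reaches its min
	allocate(b, 3, qs)             // QuotaB is still below its min
	fmt.Println("free:", free(qs)) // free: 3

	fmt.Println("QuotaA extra:", allocate(a, 3, qs)) // QuotaA extra: 2 (capped by max 6)

	fmt.Println("QuotaB got:", reclaim(b, 3, qs)) // QuotaB got: 3
	fmt.Println(a.Used, b.Used, free(qs))          // 4 6 0
}
```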

4. User Manual

Scheduler plug-in configuration example:

```yaml
apiVersion: kubescheduler.config.k8s.io/v1beta1
kind: KubeSchedulerConfiguration
leaderElection:
  leaderElect: false
clientConnection:
  kubeconfig: "REPLACE_ME_WITH_KUBE_CONFIG_PATH"
profiles:
- schedulerName: default-scheduler
  plugins:
    preFilter:
      enabled:
      - name: CapacityScheduling
    postFilter:
      enabled:
      - name: CapacityScheduling
      disabled:
      - name: "*"
    reserve:
      enabled:
      - name: CapacityScheduling
  pluginConfig:
  - name: CapacityScheduling
    args:
      kubeConfigPath: "REPLACE_ME_WITH_KUBE_CONFIG_PATH"
```

Configure Elastic Quota:

```yaml
apiVersion: scheduling.sigs.k8s.io/v1alpha1
kind: ElasticQuota
metadata:
  name: quota1
  namespace: quota1
spec:
  max:
    cpu: 2
  min:
    cpu: 0
---
apiVersion: scheduling.sigs.k8s.io/v1alpha1
kind: ElasticQuota
metadata:
  name: quota2
  namespace: quota2
spec:
  max:
    cpu: 2
  min:
    cpu: 0
```

Configure Pod Priority Class:

```yaml
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: high-priority
value: 1000000
globalDefault: false
description: "Sample High Priority Class"
```