I. Introduction

Friends who follow the Kubernetes Scheduler SIG (Special Interest Group) should know that the recently released Kubernetes In version 1.19, Scheduler Framework replaces the original Schduler working mode and officially provides the scheduler to users in a plug-in form. Compared with the “four-piece set” of the old version of the scheduler: Predicate, Priority, Bind, and Preemption. The new version of the scheduler framework is more flexible and introduces a total of 11 extension points. Users can assemble and expand the order of execution of the scheduling algorithm in the scheduling plug-in at will. Refer to the official detailed design ideas: 624-scheduling-framework

2. Impact of plugin

In the implementation of the default scheduler default-scheduler in older versions, if we want to modify and configure the scheduling algorithm order and weight of the scheduler, we often need to modify the scheduler configuration file:

apiVersion: v1

kind: Policy

predicate: # Define predicate algorithm

- name: NoVolumeZoneConflict

- name: MaxEBSVolumeCount

- ...

priorities: # Define priorities algorithm and weights

- name: SelecttorSpreadPriority

weight: 1

- name: InterPodAffinityPriority

weight: 1

- ...

extenders: # Define the extended scheduler service

- urlPrefix: "http://xxxx/xxx"

filterVerb: filter

prioritizeVerb: prioritize

enableHttps: false

nodeCacheCapable: false

ignorable: true

This configuration method is not flexible enough. The logic of the scheduler executing the scheduling algorithm is strictly executed in the order of predicate – priorities – extenders. We cannot add custom logic between the scheduling algorithm and the algorithm. When we expand custom scheduling logic, the custom scheduling algorithm can only be executed after predicate and priorities. Therefore, this scheduler configuration method and expansion mechanism can no longer meet our needs. Kuberntes officials are also slowly incorporating this The scheduler implementation is eliminated and replaced by the plug-in Scheduler Framework. Different from the configuration method of the old version of the scheduler, the new version of the scheduler has added 11 extension plug-ins to the original “four-piece set”:

- Queue sort Prioritize Pods to be scheduled

- PreFilter Condition check before Pod scheduling. If the check fails, the scheduling cycle will be ended directly (pods with certain labels and annotations will be filtered)

- Filter Filter out Nodes that do not meet the current Pod running conditions (equivalent to the old version of predicate)

- PostFilter Executed after the Filter plug-in is executed (generally used for processing Pod preemption logic)

- PreScore Status processing before scoring

- Scoring Score nodes (equivalent to priorities in older versions)

- Reserve

- Permit Admission control before Pod binding

- PreBind The logic before binding the Pod, such as: pre-mount the shared storage first and check whether it is mounted normally

- Bind Node and Pod binding

- PostBind Resource cleanup logic after successful Pod binding

Compared with the webhook-based extension mechanism (Scheduler Extender) of the old version of the scheduler, the Scheduler Framework uses binary compilation of a custom scheduler to replace the original scheduler. This is mainly done to solve some problems existing in the old version of the scheduler extension mechanism:

- Scheduling algorithm execution timing is inflexible The extended predicate and priority algorithms can only be executed after the scheduling algorithm of the default scheduler.

- Serialization and deserialization are slow The extended scheduler uses HTTP+JSON for serialization and deserialization.

- After calling the extended scheduler exception, the scheduling task of the current Pod is directly discarded A notification mechanism is needed to make the scheduler aware of extended scheduler exceptions.

- Unable to share cache of default scheduler (because extended scheduler and default scheduler are in different processes)

3. How to configure the Scheduler Framework plug-in

There is not much difference between the new version of Scheduler Framework and the old version. If you use kubeadm to install the cluster, the scheduler runs in the cluster as a static Pod by default. If we need to adjust the configuration of the scheduler, modify the scheduler configuration KubeSchedulerConfiguration. Can:

apiVersion: kubescheduler.config.k8s.io/v1beta1

kind: KubeSchedulerConfiguration

clientConnection:

kubeconfig: xxx

profiles:

- plugins:

queueSort:

enabled:

- name: "*"

preFilter:

enabled:

- name: "*"

filter:

enabled:

- name: "*"

preScore:

enabled:

- name: "*"

score:

enabled:

- name: "*"

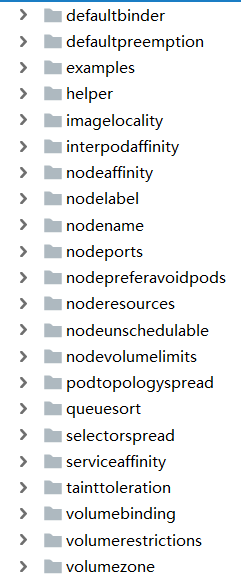

Use the * wildcard to use all provided scheduler implementations of this scheduler. In the source code of 1.19, the pkg/scheduler/framework/plugins directory provides various implementations of the built-in scheduling algorithm for the Kubernetes scheduler:

If you need to disable a certain scheduling algorithm of the scheduler, you only need to disable it under the corresponding plugin in the KubeSchedulerConfiguration configuration.

If you need to disable a certain scheduling algorithm of the scheduler, you only need to disable it under the corresponding plugin in the KubeSchedulerConfiguration configuration.

4. How to secondary develop and use a customized Scheduler Framework plug-in

When we need to add custom scheduler logic, we directly compile the custom scheduler into a binary, package it into an image and run it in the cluster, replacing the default scheduler (replacing the default scheduler by modifying the schedulerName field of the Pod), and at the same time Modify the scheduler configuration of Scheduler Framework. For example, we have customized a scheduler called Coscheduling, which extends the queueSort, preFilter, permit, reserve, and postBind plug-ins. We need to configure it as follows:

apiVersion: kubescheduler.config.k8s.io/v1beta1

kind: KubeSchedulerConfiguration

clientConnection:

kubeconfig: xxx

profiles:

- plugins:

queueSort:

enabled:

- name: Coscheduling

disabled:

- name: "*"

preFilter:

enabled:

- name: Coscheduling

disabled:

- name: "*"

filter:

disabled:

- name: "*"

preScore:

disabled:

- name: "*"

score:

disabled:

- name: "*"

permit:

enabled:

- name: Coscheduling

reserve:

enabled:

- name: Coscheduling

postBind:

enabled:

- name: Coscheduling

pluginConfig:

- name: Coscheduling

args:

permitWaitingTimeSeconds: 10

kubeConfigPath: "%s"

So what is the process of secondary development of a custom scheduler? The official interface is provided for each plug-in. We only need to implement these interfaces in our own scheduler:

// pkg/scheduler/framework/v1alpha1/interface.go

type QueueSortPlugin interface {

Plugin

Less(*QueuedPodInfo, *QueuedPodInfo) bool

}

type PreFilterExtensions interface {

AddPod(ctx context.Context, state *CycleState, podToSchedule *v1.Pod, podToAdd *v1.Pod, nodeInfo *NodeInfo) *Status

RemovePod(ctx context.Context, state *CycleState, podToSchedule *v1.Pod, podToRemove *v1.Pod, nodeInfo *NodeInfo) *Status

}

type PreFilterPlugin interface {

Plugin

PreFilter(ctx context.Context, state *CycleState, p *v1.Pod) *Status

PreFilterExtensions() PreFilterExtensions

}

type FilterPlugin interface {

Plugin

Filter(ctx context.Context, state *CycleState, pod *v1.Pod, nodeInfo *NodeInfo) *Status

}

type PostFilterPlugin interface {

Plugin

PostFilter(ctx context.Context, state *CycleState, pod *v1.Pod, filteredNodeStatusMap NodeToStatusMap) (*PostFilterResult, *Status)

}

type PreScorePlugin interface {

Plugin

PreScore(ctx context.Context, state *CycleState, pod *v1.Pod, nodes []*v1.Node) *Status

}

type ScoreExtensions interface {

NormalizeScore(ctx context.Context, state *CycleState, p *v1.Pod, scores NodeScoreList) *Status

}

type ScorePlugin interface {

Plugin

Score(ctx context.Context, state *CycleState, p *v1.Pod, nodeName string) (int64, *Status)

ScoreExtensions() ScoreExtensions

}

type ReservePlugin interface {

Plugin

Reserve(ctx context.Context, state *CycleState, p *v1.Pod, nodeName string) *Status

Unreserve(ctx context.Context, state *CycleState, p *v1.Pod, nodeName string)

}

type PreBindPlugin interface {

Plugin

PreBind(ctx context.Context, state *CycleState, p *v1.Pod, nodeName string) *Status

}

type PostBindPlugin interface {

Plugin

PostBind(ctx context.Context, state *CycleState, p *v1.Pod, nodeName string)

}

type PermitPlugin interface {

Plugin

Permit(ctx context.Context, state *CycleState, p *v1.Pod, nodeName string) (*Status, time.Duration)

}

type BindPlugin interface {

Plugin

Bind(ctx context.Context, state *CycleState, p *v1.Pod, nodeName string) *Status

}

For specific implementation code, please refer to the official sample provided by Kubernetes: scheduler-plugins

5. Summary

The time span from the official Kubernetes proposal to restructure Scheduler to the official launch of the new version of Scheduler Framework was about more than a year. Thanks to the high activity and participation of the Kubernetes community, the reconstruction work was successfully completed. However, even though the scheduler framework has been reconstructed, the existing scheduler algorithms are not perfect and cannot fully meet the various scheduling needs of cluster container orchestration, such as real load-based scheduling algorithms and GPU-based scheduling algorithms. Wait, it still needs to be improved in the future. Speaking of the scheduler, I have to mention another open source project of Kubernetes: Descheduler (anti-scheduler). Its function is to dynamically adjust the scheduling results of completed scheduling Pods. I will talk about its implementation in detail next time. Principles and application scenarios.