1. How to calculate resources in Kubernetes

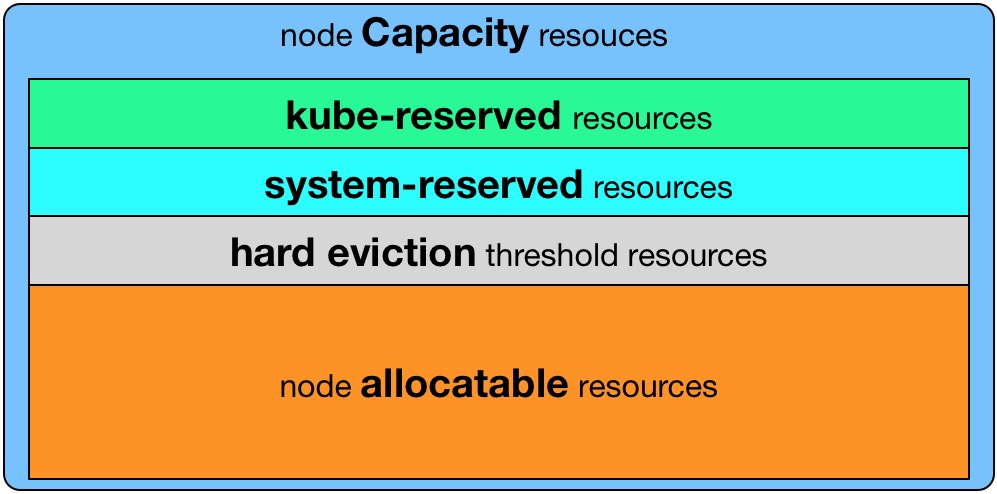

In Kubernetes, the kubelet exposes the Node Allocatable feature on each node. Node Allocatable reserves resources for Kubernetes components and system processes, so that both still have sufficient resources when the node is fully loaded.

The resources that a node can allocate to Pods are calculated as follows:

Node Allocatable = Node Capacity (total resources) - kube-reserved (reserved for Kubernetes components) - system-reserved (reserved for system processes) - eviction-threshold (hard eviction threshold set by the user)

However much the Pods on a node consume, their total usage is not allowed to exceed Node Allocatable. Once it does, the kubelet triggers its eviction policy and evicts Pods.

2. How to configure resource reservation in Kubernetes

Resource reservation is configured through the following kubelet startup parameters:

- `--enforce-node-allocatable`: defaults to pods. To enforce the reservations for Kubernetes components and system processes, set it to pods,kube-reserved,system-reserved.

- `--cgroups-per-qos`: enables QoS-level and Pod-level cgroups; enabled by default. Once enabled, the kubelet manages the cgroups of all workload Pods.

- `--cgroup-driver`: defaults to cgroupfs; the other option is systemd. The kubelet must use the same cgroup driver as the container runtime. For example, if Docker is configured to use the systemd cgroup driver, the kubelet must also be started with `--cgroup-driver=systemd`; otherwise the kubelet reports an error on startup.

- `--kube-reserved`: the amount of resources reserved for Kubernetes components (kubelet, kube-proxy, dockerd, etc.), for example `--kube-reserved=cpu=1000m,memory=8Gi,ephemeral-storage=16Gi`.

- `--kube-reserved-cgroup`: if kube-reserved is to be enforced, be sure to set the corresponding cgroup. The cgroup directory must be created in advance (/sys/fs/cgroup/{subsystem}/kubelet.slice); the kubelet does not create it automatically and fails to start if it is missing. For example, with `--kube-reserved-cgroup=/kubelet.slice`, you need to create the kubelet.slice directory in the root directory of each subsystem.

- `--system-reserved`: the amount of resources reserved for system processes, for example `--system-reserved=cpu=500m,memory=4Gi,ephemeral-storage=4Gi`.

- `--system-reserved-cgroup`: if system-reserved is to be enforced, be sure to set the corresponding cgroup. The cgroup directory must be created in advance (/sys/fs/cgroup/{subsystem}/system.slice); the kubelet does not create it automatically and fails to start if it is missing. For example, with `--system-reserved-cgroup=/system.slice`, you need to create the system.slice directory in the root directory of each subsystem.

- `--eviction-hard`: configures the kubelet's hard eviction thresholds. It supports only two incompressible resources: memory and ephemeral-storage. When a node is under MemoryPressure, the scheduler does not schedule new BestEffort QoS Pods to it. When a node is under DiskPressure, the scheduler does not schedule any new Pods to it.
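The dependency between the enforcement list and the cgroup parameters can be checked with a small script before restarting the kubelet. The following is a minimal sketch that only encodes the rule stated in the list above (an enforced reservation needs its cgroup parameter). The helper `check_reservation_flags` and its messages are hypothetical and are not the kubelet's own validation code.

```python
def check_reservation_flags(flags):
    """Return a list of problems found in a dict of kubelet flag values.

    `flags` maps flag names (without the leading dashes) to string values.
    Hypothetical helper: it only checks that every enforced reservation
    has a matching *-cgroup flag.
    """
    problems = []
    raw = flags.get("enforce-node-allocatable", "pods")
    enforced = [item.strip() for item in raw.split(",") if item.strip()]
    for key in ("kube-reserved", "system-reserved"):
        if key in enforced and not flags.get(f"{key}-cgroup"):
            problems.append(f"--{key}-cgroup must be set when '{key}' is enforced")
    return problems


flags = {
    "enforce-node-allocatable": "pods,kube-reserved,system-reserved",
    "kube-reserved": "cpu=500m,memory=1Gi",
    "kube-reserved-cgroup": "/kubelet.slice",
    "system-reserved": "cpu=500m,memory=1Gi",
    # system-reserved-cgroup is intentionally missing in this example
}
for problem in check_reservation_flags(flags):
    print(problem)
```

With the default enforcement list (pods only), the function reports nothing, since neither cgroup parameter is needed in that case.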


Here are some examples:

kubelet startup args:

    --enforce-node-allocatable=pods,kube-reserved,system-reserved
    --kube-reserved-cgroup=/kubelet.slice
    --system-reserved-cgroup=/system.slice
    --kube-reserved=cpu=500m,memory=1Gi
    --system-reserved=cpu=500m,memory=1Gi
    --eviction-hard=memory.available<500Mi,nodefs.available<10%

For a node with 4 cores and 32Gi of memory, the allocatable resources are calculated as follows:

NodeAllocatable = NodeCapacity - kube-reserved - system-reserved - eviction-threshold

Allocatable CPU = 4 - 0.5 - 0.5 = 3 cores

Allocatable memory = 32 - 1 - 1 - 0.5 ≈ 29.5Gi (the 500Mi eviction threshold is rounded to 0.5Gi)
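The same arithmetic can be reproduced in a few lines of Python. This is a minimal sketch, not the kubelet's implementation: the helpers `parse_cpu`, `parse_memory`, and `node_allocatable` are hypothetical, and the parser only handles the quantity forms used in this article (millicores, whole cores, and the binary suffixes Ki/Mi/Gi/Ti).

```python
from fractions import Fraction

# Binary suffixes used in the memory quantities of this article.
_BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def parse_cpu(quantity):
    """Parse a CPU quantity such as '500m' or '4' into millicores."""
    quantity = quantity.strip()
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(Fraction(quantity) * 1000)


def parse_memory(quantity):
    """Parse a memory quantity such as '1Gi' or '500Mi' into bytes."""
    quantity = quantity.strip()
    for suffix, factor in _BINARY.items():
        if quantity.endswith(suffix):
            return int(Fraction(quantity[: -len(suffix)]) * factor)
    return int(quantity)  # no suffix: plain bytes


def node_allocatable(capacity, kube_reserved, system_reserved, eviction_memory):
    """Apply: capacity - kube-reserved - system-reserved - eviction-threshold."""
    cpu = (parse_cpu(capacity["cpu"])
           - parse_cpu(kube_reserved.get("cpu", "0"))
           - parse_cpu(system_reserved.get("cpu", "0")))
    memory = (parse_memory(capacity["memory"])
              - parse_memory(kube_reserved.get("memory", "0"))
              - parse_memory(system_reserved.get("memory", "0"))
              - parse_memory(eviction_memory))
    return {"cpu_millicores": cpu, "memory_bytes": memory}


result = node_allocatable(
    capacity={"cpu": "4", "memory": "32Gi"},
    kube_reserved={"cpu": "500m", "memory": "1Gi"},
    system_reserved={"cpu": "500m", "memory": "1Gi"},
    eviction_memory="500Mi",
)
print(result["cpu_millicores"])                   # 3000
print(round(result["memory_bytes"] / 2**30, 2))   # 29.51
```

The exact memory result is slightly above 29.5Gi because 500Mi is a little less than 0.5Gi.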

3. The underlying implementation of resource reservation

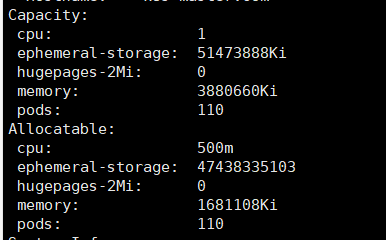

Resource reservation in Kubernetes works much like it does in Docker: it is implemented on top of the Linux cgroup mechanism. When the `--kube-reserved` and `--system-reserved` startup parameters are set, the kubelet calculates the maximum amount of resources that can be allocated to Pods, which is NodeAllocatable, and then creates the corresponding cgroup settings on the node. For example, I set kubeReserved and systemReserved in the kubelet startup configuration:

    kubeReserved:
      memory: 1Gi
      cpu: 500m
    systemReserved:
      memory: 1Gi

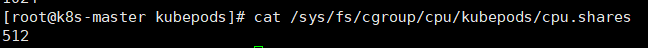

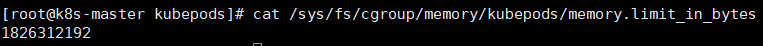

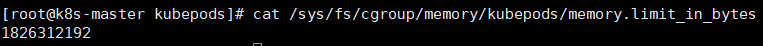

After the kubelet starts, it sets the following limits on the kubepods cgroup (on a machine with 1 core and 4G of memory):

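cpu: 512 = 500 * 1024 / 1000 (the 500m of CPU left after subtracting the 500m kube-reserved from 1000m, converted to CPU shares)

Memory: 1826312192 = 3880660 * 1024 - 2048 * 1024 * 1024 (the node capacity of 3880660 KiB minus the 2Gi reserved in total, in bytes)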
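The two values above can be recomputed as follows. This is a minimal sketch assuming cgroup v1 semantics, where 1000 millicores correspond to 1024 CPU shares. The function names are hypothetical, and the inputs are the figures from this example (1 core, 3880660 KiB of memory, 500m of CPU and 2Gi of memory reserved in total).

```python
def cpu_shares(millicores):
    """Convert millicores to CPU shares (1000m corresponds to 1024 shares)."""
    return millicores * 1024 // 1000


def kubepods_limits(capacity_millicores, capacity_kib,
                    reserved_millicores, reserved_bytes):
    """Return (cpu shares, memory limit in bytes) for the kubepods cgroup."""
    shares = cpu_shares(capacity_millicores - reserved_millicores)
    memory_limit = capacity_kib * 1024 - reserved_bytes
    return shares, memory_limit


shares, memory_limit = kubepods_limits(
    capacity_millicores=1000,      # 1 core
    capacity_kib=3880660,          # node memory capacity in KiB
    reserved_millicores=500,       # kubeReserved cpu: 500m
    reserved_bytes=2 * 1024**3,    # kubeReserved 1Gi + systemReserved 1Gi
)
print(shares, memory_limit)  # 512 1826312192
```

Note that the eviction threshold does not appear in this calculation: the figures in the example subtract only the two reservations from the node capacity.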

This limits the resources available to Pods, so the system and the Kubernetes components are not starved because Pods take up too many resources. However, kubeReserved and systemReserved are not truly enforced: the resources used by the Kubernetes components and the system themselves are not restricted, so they may still affect the normal operation of the Pods on the node.

Therefore, to truly enforce kubeReserved and systemReserved, you need to add the `--kube-reserved-cgroup` and `--system-reserved-cgroup` parameters, so that the reservations are applied to dedicated cgroups for the Kubernetes components and the system.

I added the kubeReservedCgroup, systemReservedCgroup and enforceNodeAllocatable parameters to the kubelet startup configuration:

    enforceNodeAllocatable:
      - "pods"
      - "kube-reserved"
      - "system-reserved"
    kubeReservedCgroup: /runtime.slice
    systemReservedCgroup: /system.slice
    kubeReserved:
      memory: 1Gi
      cpu: 500m
    systemReserved:
      memory: 1Gi

Note: For the system.slice cgroup used by systemReservedCgroup, there is no system.slice directory by default under /sys/fs/cgroup/cpuset/ and /sys/fs/cgroup/hugetlb/, so you need to create it there manually. For the runtime.slice cgroup used by kubeReservedCgroup, the directory does not exist under any of the resources (cpu, memory, etc.) in /sys/fs/cgroup/ and must be created manually under each of them. If you skip these two steps, the kubelet's check that the cgroups exist fails.
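Creating the missing directories by hand for every subsystem is easy to get wrong, so it can be scripted. The following is a minimal sketch under stated assumptions: the `SUBSYSTEMS` list is an assumed set of cgroup v1 subsystems and should be adjusted to what the node actually mounts, and `ensure_cgroup_dirs` is a hypothetical helper. The example runs against a temporary directory rather than /sys/fs/cgroup, so it can be tried safely.

```python
import tempfile
from pathlib import Path

# Assumed cgroup v1 subsystems; adjust to the hierarchies mounted on the node.
SUBSYSTEMS = ["cpu", "cpuacct", "cpuset", "memory", "hugetlb", "pids", "systemd"]


def ensure_cgroup_dirs(cgroup_root, cgroup_name, subsystems=SUBSYSTEMS):
    """Create <cgroup_root>/<subsystem>/<cgroup_name> for each subsystem.

    Returns the directories that did not exist before the call.
    """
    created = []
    for subsystem in subsystems:
        path = Path(cgroup_root) / subsystem / cgroup_name.lstrip("/")
        if not path.is_dir():
            path.mkdir(parents=True)
            created.append(path)
    return created


# Demonstrated against a temporary directory instead of /sys/fs/cgroup.
with tempfile.TemporaryDirectory() as root:
    first = ensure_cgroup_dirs(root, "/runtime.slice")
    second = ensure_cgroup_dirs(root, "/runtime.slice")
    print(len(first), len(second))  # 7 0
```

On a real node the root would be /sys/fs/cgroup and the script would need root privileges. The existence check makes the function safe to run again, for example from a unit that runs before the kubelet starts.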

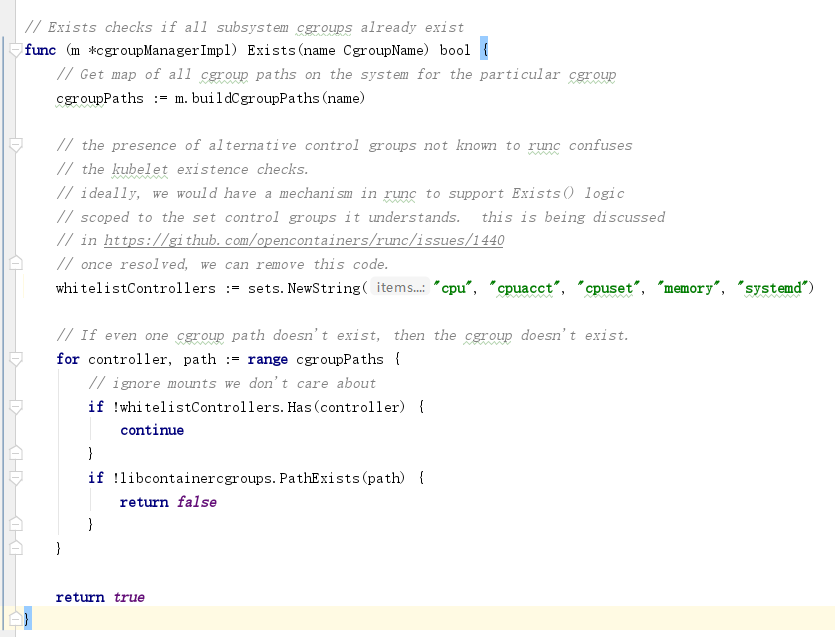

Source code logic for kubelet to check whether cgroup is created (pkg/kubelet/cm/cgroup_manager_linux.go):

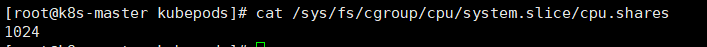

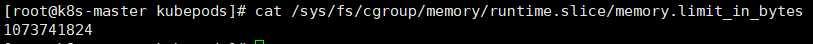

After the kubelet starts, it sets resource limits on the /runtime.slice and /system.slice cgroups (on a machine with 1 core and 4G of memory):

Kube components retain cpu weight:

system retains cpu weight:

system retains cpu weight:

Kube components reserve memory:

Kube components reserve memory:

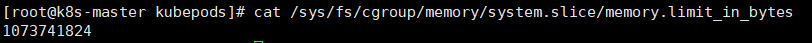

system reserved memory:

system reserved memory:

4. Officially recommended cgroup settings

Reference: [https://github.com/kubernetes/community/blob/master/contributors/design-proposals/node/node-allocatable.md#recommended-cgroups-setup](https://github.com/kubernetes/community/blob/master/contributors/design-proposals/node/node-allocatable.md#recommended-cgroups-setup)

Users are advised to be extremely careful when enforcing SystemReserved, as this may result in critical system services being CPU starved or OOM killed on the node. It is recommended to enforce SystemReserved only when the user has exhaustively profiled their node to arrive at an accurate estimate.

Therefore, we generally do not recommend configuring systemReservedCgroup in production, to avoid the risk of system OOM.